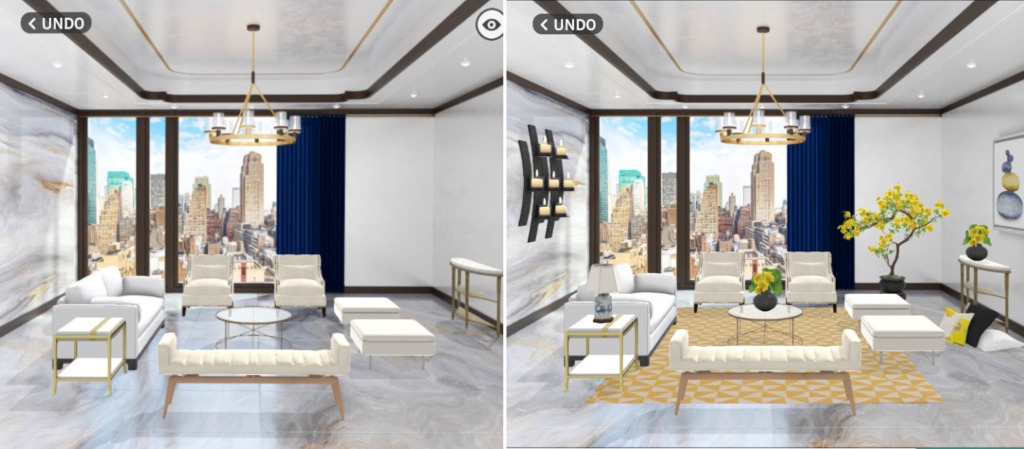

Funny story… so, a “friend” of mine may have a bit of an obsession with a particular app that allows a person to decorate rooms. It’s silly and leads this friend to zone out more than she probably should, but it’s one of her guilty pleasures. To play this game, one is given a room with a bunch of bubbles that are to be filled with various pieces of furniture or other decorations. The blue ones *must* be filled to complete the room and the purple ones are optional. See exhibit A:

The thing is, without the optional things, the rooms always look drab. But with the things, they look straight out of a (lame and really generic) beautiful showroom! See:

However, many of the optional things require “diamonds” in the game, whereas most of the required things can be purchased with game “dollars.” Game dollars come pretty easily: the first room of the day shells out $2,500 and most rooms you finish add $500 to your pot. Diamonds, though, are more precious. You get 500 diamonds per day, but most wall art, for instance, costs at least that much and many pieces cost a thousand or more. However, one *can* spend real money to buy diamonds, which one might do if one were really jonesing to fully decorate a room. Also, you get free furniture if you score high enough when people vote on your room, and let me tell you, no virtual tchotchkes, no virtual votes. So if a person were feeling game-weak but budget-strong, and didn’t want to pay real hard-earned money for new objets d’art for their virtual cocktail table but couldn’t wait for the next diamond fill, what are they to do? (And, you might be asking yourself, what does any of this have to do with doing social research?)

Well, there’s another option to get diamonds: complete online surveys. So, to justify lying on the couch for hours on a Saturday night in the interest of social science, I conducted some fieldwork to assess the research methods being used in the wilds of the game-app universe. What I can tell you is that no one should be trusting the data coming out of these methods.

I answered about 3 bazillion of these things and found most of them pretty dumb, poorly constructed, and meaningless. The majority are market research for companies, where they’ll want to know how likely you are to recognize a certain brand or logo, and whether or not you are a decision maker in a company. Stuff like that. A lot of them kick you off if you don’t fit into their desired population, usually leaving a game player with a measly 15 diamonds in payment. Sometimes I had spent quite a long time getting to the point where they kicked me off, b/c they set up all kinds of trick questions to try to make sure people aren’t just answering randomly. Like, they’ll ask you for your birth date, then three pages later, they’ll ask how old you are. You get kicked out if they don’t match.

They seem to underestimate the greater rewards for lying in these games. Every time you start a new survey, you have to go through the same annoying round of screening questions. If you get through these, the 15 diamonds jump to hundreds and sometimes thousands of diamonds. This makes it wise for a person to at least be consistent in their lies in order to trick the system. One might, hypothetically, always use the same wrong birthday on all of these.

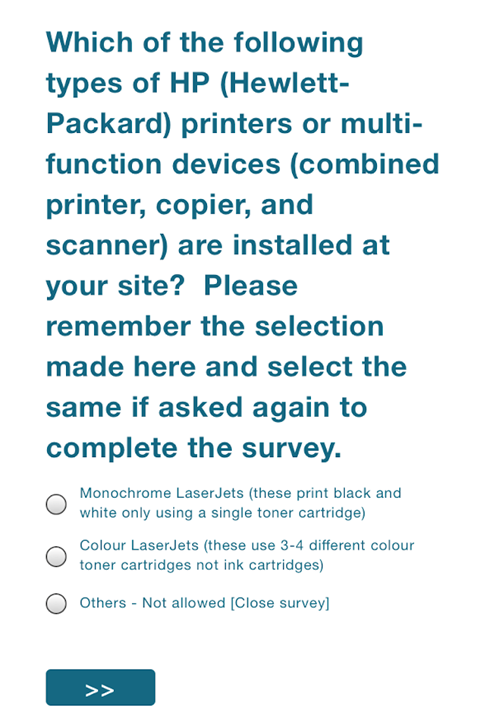

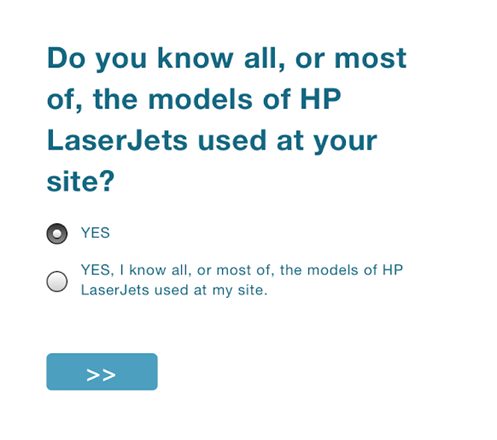

Some are so poorly constructed that they tell the user which responses will get you kicked off:

If you’re reading carefully, you know not to select “others” since that would lead the survey to close. Others were so badly constructed as to make no sense, such as this item in the same HP survey:

Hmmm… yes or yes? I guess my answer is yes.

I pretty much just laughed at these in my academic arrogance, thinking to myself, oh those corporate researchers need to go back to school where they can learn how proper research is carried out… *chortle, chortle*

Until I started to stumble onto some academic studies. At first, I was like, okay, not really a random sample, but okay, maybe it’s not so bad. There was one from the University of Toronto about the then-upcoming federal election in Canada (I managed to answer that one twice) and another from somewhere else about I don’t remember what. They were questionable, but I felt an academic duty to answer them more honestly than I did the market-research surveys (which I answered for research purposes, of course). I may have indicated in one of the latter that I was in fact a senior vice president in charge of printer purchasing decisions for a company with over 100,000 employees (they TOLD me how to click! don’t judge me… fine, judge me, whatever). As the night wore on, I had sunk significant time into my plan of getting game-rich on diamonds through research, yet I hadn’t earned that many diamonds. I was also getting kind of annoyed at the number of times I was kicked off after spending a good 20 minutes trying to answer honestly about all the kids in my home and how we make decisions about laundry detergent or whatever. Eventually my persistence paid off when I hit the mother lode: an academic survey promising so many diamonds. I could already see the future sad clown painting hanging on the walls of my virtual urban loft, and the grant I was going to write about how to better collect online data.

It started out innocently enough:

Survey: Do you have children between the ages of 14-17 in your home?

Me: Yes, indeed!

Survey: Are they home now?

Me: They sure are!

Survey: Would they be willing to answer some questions?

Me: … *thinking: Um, in general, probably, I mean, they’re curious, right?* … *clicks yes*

Survey: Can you hand your device to the teenager in your home?

Me: … *thinking* …

I may have meekly called out to the void between me and them in their rooms, “Kids? Anyone want to fill out a survey?”

*I know them so well, what’s the difference between their answering and my answering?*

*clicks yes*

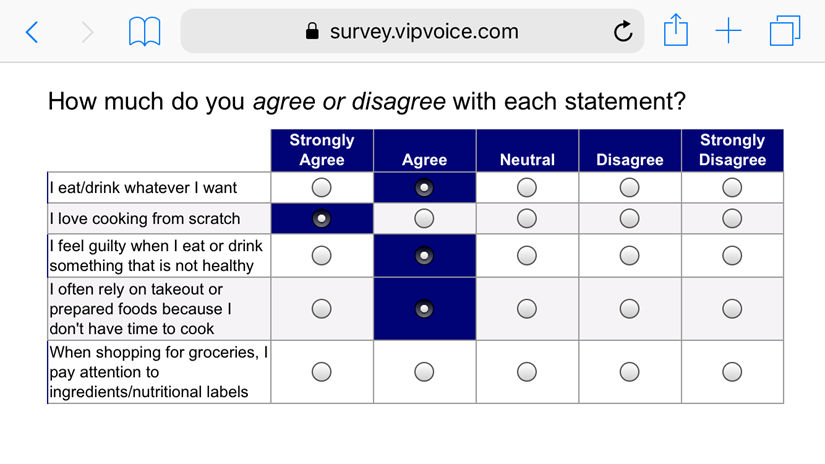

So, I began to answer as though I were a teenager in my home. At first this was fine, whatever, and I started to feel less guilty for lying since the survey was poorly designed in some ways, such as with this one:

Those questions are just weird to ask a teenager. “I love cooking from scratch?” Okay, I actually did as a teenager, I’ll give you that, but that’s only because my parents didn’t feed me.

So, I started to think maybe this was just a pilot study to test the validity of the questions. That’s not a bad thing to do with a non-random internet sample. You could use respondents to help you identify weaknesses in your survey; it’s far more expensive than just handing it out to some students or people you know, but fine, maybe they were just doing their due diligence.

Then the survey went on to focus far more on one topic in particular, vaping, which is something I know very little about. I don’t vape and I don’t really care much for it or about it, so I don’t know the brands or what’s good or bad, and I REALLY don’t know what teenagers know about it. So my answers started to get really wonky and were probably just wrong. But again, I’m like, whatever, maybe it’s a pilot study… until… the next Monday…

Having forgotten about my Saturday night “research” binge, I’m innocently driving to work, listening to CBC Radio, when I hear a news item about a recent study published in a major journal about teenagers’ awareness of vaping products. I freeze in my seat (well, I was driving, so I wasn’t moving my body, but if I had been moving, I would have frozen). I may have started to sweat a bit and turned up the volume. They went on to discuss the findings of a research study by the same guy who was listed on the consent form at the start of the survey I had completed not two days before. As soon as I got to my office, I started googling. I discovered that the researcher on the radio had published in a million journals, and in GOOD journals. I thought, NO, it can’t be that these journals would publish this! They must know that anyone can answer these surveys! There’s no accountability or way of knowing who is answering them. They wouldn’t! But they did. It said right in the abstract:

… and then they gave more detail in the methods section:

Now, that study was published before I carried out my Saturday night research, but according to the researcher’s website, it is part of a larger series of studies. Who knows who answered the survey? I still feel shame about lying on a survey, but what proportion of the world’s population would feel similarly? And even if YOU are a better person who would never lie, and are virtuous and holy and good, and I should simply be lumped in with the unethical users of online games, that means online surveys are even further biased in favor of us heathens! Perhaps I personally should have known better, but I really do not think that most people answering these surveys are deeply aware of the nuances of survey research.

This is one of the many reasons why I tend to trust only data from Statistics Canada or other official statistical agencies, where non-response can at least be assessed relative to a national Census and where there is no overly alluring prize being dangled to encourage people to misrepresent themselves. This is also why I reject critiques of qualitative research for being too “biased” or not representative. Just because there is a statistic attached to a finding does not mean that the finding means anything, no matter how well written the paper is or how well the data were analysed. The results may actually represent the beliefs of an exhausted middle-aged working mother trying to wind down after a grueling end of term. Hypothetically, of course.